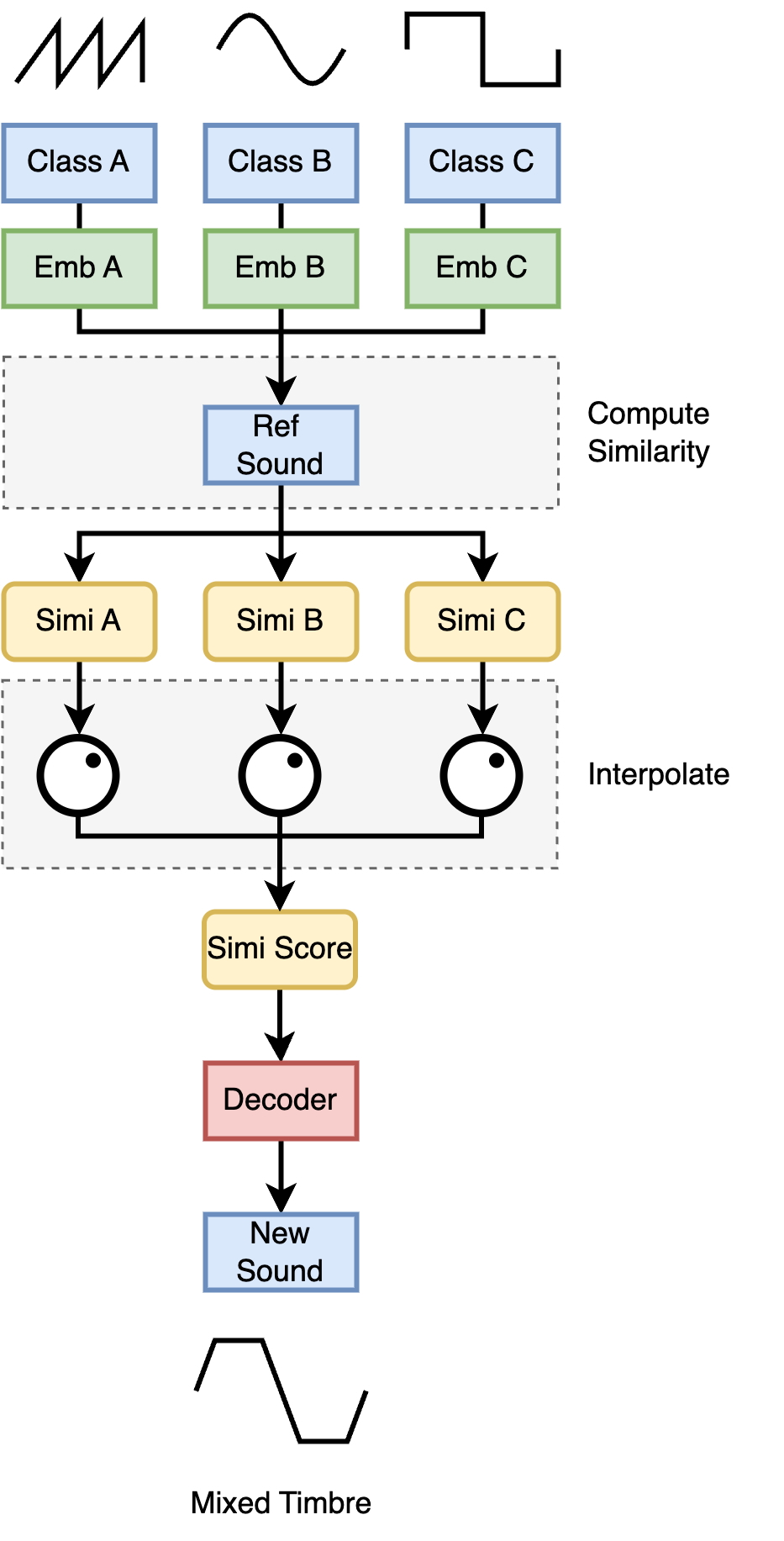

Generating sound effects with controllable variations is a challenging task, traditionally addressed with sophisticated physical models that require in-depth knowledge of signal processing parameters and algorithms. In the era of generative and large language models, text has emerged as a common, human-interpretable interface for controlling sound synthesis. However, the discrete and qualitative nature of language tokens makes it difficult to capture subtle timbral variations across different sounds. In this work, the authors propose a novel similarity-based conditioning method for sound synthesis that leverages differentiable digital signal processing. The approach combines a latent space for learning and controlling audio timbre with an intuitive guiding vector, normalized to the range [0, 1], that encodes categorical acoustic information. By using pretrained audio representation models, the method achieves expressive and fine-grained timbre control. To benchmark the approach, the authors introduce two sound effect datasets, Footstep-set and Impact-set, designed to evaluate both controllability and sound quality. Regression analysis shows that the proposed similarity score effectively controls timbre variations and enables creative applications such as timbre interpolation between discrete classes. This work provides a robust and versatile framework for sound effect synthesis, bridging the gap between traditional signal processing and modern machine learning techniques.
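The abstract does not state how the similarity score is computed or normalized. Purely as an illustration of the general idea of a [0, 1] guiding vector built from pretrained embeddings, the sketch below assumes cosine similarity between a sound's embedding and a per-class mean embedding, mapped linearly from [-1, 1] to [0, 1], and treats interpolation as a linear blend of two guiding vectors. The function names, class labels, and toy 3-dimensional embeddings are hypothetical stand-ins for the output of a real pretrained audio representation model; this is not the authors' implementation.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity, clamped to [-1, 1] to absorb rounding error."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return max(-1.0, min(1.0, dot / (norm_a * norm_b)))


def class_prototypes(embeddings: Dict[str, List[List[float]]]) -> Dict[str, List[float]]:
    """Hypothetical helper: mean embedding per class label."""
    prototypes = {}
    for label, vectors in embeddings.items():
        dim = len(vectors[0])
        prototypes[label] = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    return prototypes


def similarity_vector(embedding: Sequence[float],
                      prototypes: Dict[str, List[float]],
                      labels: Sequence[str]) -> List[float]:
    """Assumed normalization: map each cosine similarity from [-1, 1] to [0, 1]."""
    return [(cosine_similarity(embedding, prototypes[label]) + 1.0) / 2.0 for label in labels]


def interpolate(sim_a: Sequence[float], sim_b: Sequence[float], alpha: float) -> List[float]:
    """Linear blend of two guiding vectors; alpha = 0 gives sim_a, alpha = 1 gives sim_b."""
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(sim_a, sim_b)]


# Toy embeddings standing in for a pretrained audio model's output (hypothetical data).
protos = class_prototypes({
    "grass": [[1.0, 0.0, 0.0], [0.8, 0.2, 0.0]],
    "wood": [[0.0, 1.0, 0.0], [0.0, 0.9, 0.1]],
})
labels = ["grass", "wood"]
guide = similarity_vector([1.0, 0.0, 0.0], protos, labels)
halfway = interpolate([1.0, 0.0], [0.0, 1.0], 0.5)
```

Because every entry stays in [0, 1], a guiding vector of this kind can be set by hand or blended between classes, which is the kind of continuous control the abstract contrasts with discrete text tokens.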

Author(s): Liu, Yunyi; Jin, Craig

Affiliation:

Computing and Audio Research Laboratory, Department of Electrical and Information Engineering, The University of Sydney, Sydney, Australia (both authors)

(See document for exact affiliation information.)

Publication Date:

2025-09-05

Permalink: https://aes2.org/publications/elibrary-page/?id=22952

Liu, Yunyi; Jin, Craig; 2025; A Similarity-Based Conditioning Method for Controllable Sound Effect Synthesis; Computing and Audio Research Laboratory, Department of Electrical and Information Engineering, The University of Sydney, Sydney, Australia; Paper; Available from: https://aes2.org/publications/elibrary-page/?id=22952