Home / Publications / E-library page

You are currently logged in as an

Institutional Subscriber.

If you would like to logout,

please click on the button below.

Home / Publications / E-library page

Only AES members and Institutional Journal Subscribers can download

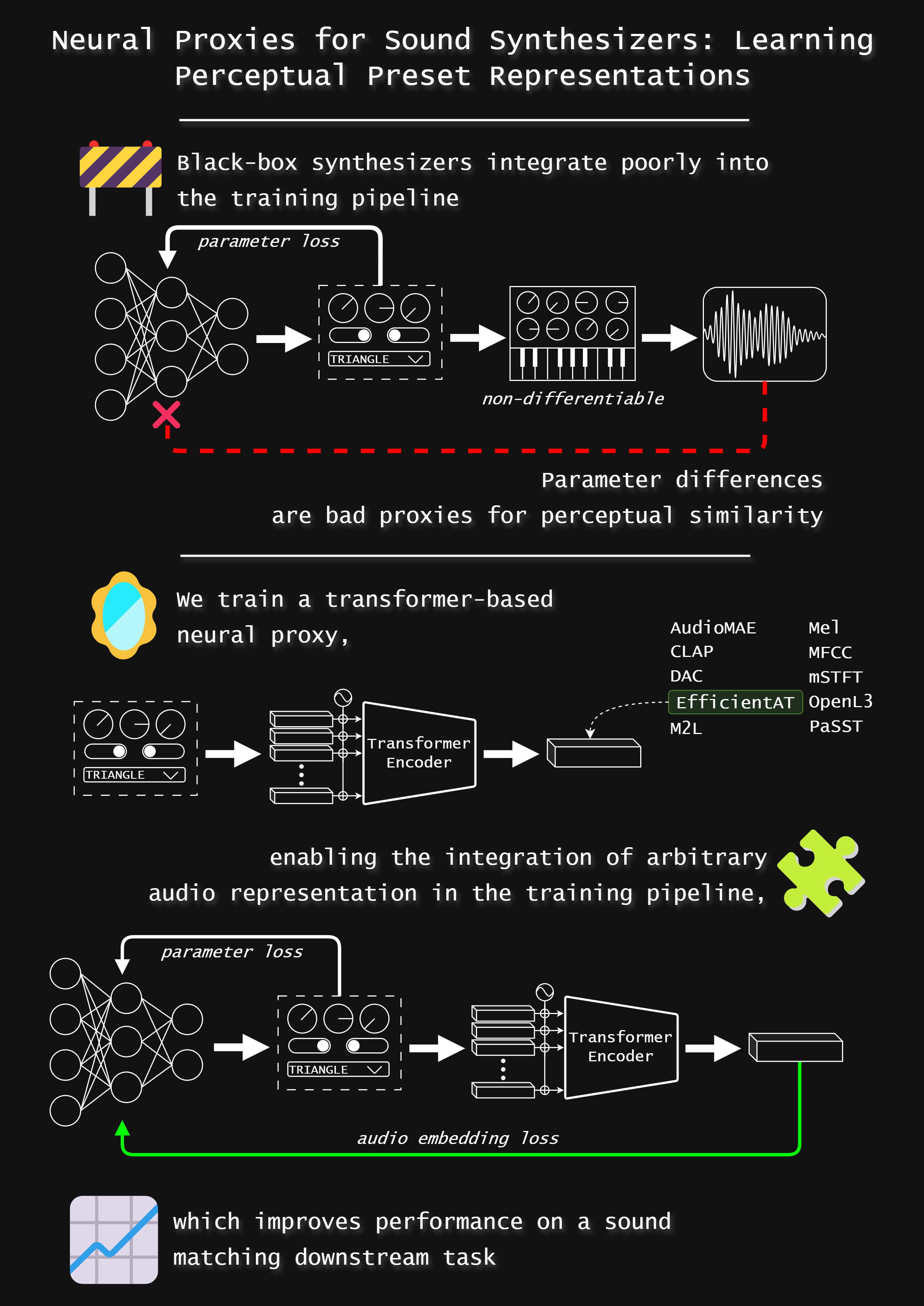

Deep learning is an appealing approach to automatic synthesizer programming (ASP), which aims to assist musicians and sound designers in programming sound synthesizers. However, integrating software synthesizers into training pipelines is challenging because they may be nondifferentiable. This work tackles this challenge by introducing a method to approximate arbitrary synthesizers. Specifically, a neural network is trained to map synthesizer presets onto an audio embedding space derived from a pretrained model. The resulting neural proxy produces compact yet effective representations, which makes it possible to integrate an audio embedding loss into neural-based ASP systems for black-box synthesizers. The authors evaluate the representations derived by various pretrained audio models in the context of neural-based methods for ASP and assess the effectiveness of several neural network architectures, including feedforward, recurrent, and transformer-based models, in defining neural proxies. The proposed method is evaluated using both synthetic and handcrafted presets from three popular software synthesizers, and its performance is assessed in a downstream synthesizer sound-matching task. Although the benefits of the learned representation are tempered by resource requirements, encouraging results were obtained for all synthesizers, paving the way for future research into the application of synthesizer proxies for neural-based ASP systems.
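The idea of a preset-to-embedding proxy can be illustrated with a toy sketch. This is not the authors' implementation: the single tanh layer, the mean-squared-error loss, the sizes `N_PARAMS` and `EMB_DIM`, and the function `embed_rendered_audio` (a stand-in for rendering a preset with a black-box synthesizer and embedding the audio with a pretrained model) are all assumptions chosen so that the example runs with the Python standard library alone.

```python
import math
import random

random.seed(0)
N_PARAMS, EMB_DIM = 4, 3  # toy sizes, assumed for illustration only

# Hypothetical stand-in for the expensive, nondifferentiable path:
# preset -> black-box synthesizer -> audio -> pretrained-model embedding.
_TEACHER = [[random.uniform(-1, 1) for _ in range(N_PARAMS)]
            for _ in range(EMB_DIM)]


def embed_rendered_audio(preset):
    return [math.tanh(sum(w * p for w, p in zip(row, preset)))
            for row in _TEACHER]


def random_preset():
    # Synthetic preset: every parameter normalized to [0, 1).
    return [random.random() for _ in range(N_PARAMS)]


class Proxy:
    """Toy neural proxy: one tanh layer mapping a preset to an embedding."""

    def __init__(self):
        self.w = [[0.0] * N_PARAMS for _ in range(EMB_DIM)]
        self.b = [0.0] * EMB_DIM

    def __call__(self, preset):
        return [math.tanh(sum(w * p for w, p in zip(row, preset)) + b)
                for row, b in zip(self.w, self.b)]

    def sgd_step(self, preset, target, lr=0.1):
        pred = self(preset)
        for i in range(EMB_DIM):
            # Gradient of the MSE with respect to the pre-activation of output i.
            g = 2.0 * (pred[i] - target[i]) * (1.0 - pred[i] ** 2) / EMB_DIM
            for j in range(N_PARAMS):
                self.w[i][j] -= lr * g * preset[j]
            self.b[i] -= lr * g


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def mean_loss(proxy, presets):
    return sum(mse(proxy(p), embed_rendered_audio(p)) for p in presets) / len(presets)


# Train the proxy on (preset, embedding) pairs and compare held-out loss.
eval_presets = [random_preset() for _ in range(200)]
proxy = Proxy()
initial_loss = mean_loss(proxy, eval_presets)
for _ in range(5000):
    p = random_preset()
    proxy.sgd_step(p, embed_rendered_audio(p))
final_loss = mean_loss(proxy, eval_presets)

# Embedding loss for sound matching: score a candidate preset against a
# target sound's embedding without rendering audio for the candidate.
target_embedding = embed_rendered_audio(random_preset())
candidate = random_preset()
matching_loss = mse(proxy(candidate), target_embedding)
print(initial_loss, final_loss, matching_loss)
```

Once trained, the proxy is an ordinary differentiable function of the preset, so a loss such as `matching_loss` could in principle be backpropagated into a preset-estimating network, which is the role the abstract assigns to the audio embedding loss.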

Author(s): Combes, Paolo; Weinzierl, Stefan; Obermayer, Klaus

Affiliation:

Audio Communication Group, TU Berlin, Berlin, Germany; Audio Communication Group, TU Berlin, Berlin, Germany; Neural Information Processing Group, TU Berlin, Berlin, Germany

(See document for exact affiliation information.)

Publication Date:

2025-09-05


Permalink: https://aes2.org/publications/elibrary-page/?id=22956


Combes, Paolo; Weinzierl, Stefan; Obermayer, Klaus; 2025; Neural Proxies for Sound Synthesizers: Learning Perceptually Informed Preset Representations; Audio Communication Group, TU Berlin, Berlin, Germany; Audio Communication Group, TU Berlin, Berlin, Germany; Neural Information Processing Group, TU Berlin, Berlin, Germany; Paper; Available from: https://aes2.org/publications/elibrary-page/?id=22956

Combes, Paolo; Weinzierl, Stefan; Obermayer, Klaus; Neural Proxies for Sound Synthesizers: Learning Perceptually Informed Preset Representations; Audio Communication Group, TU Berlin, Berlin, Germany; Audio Communication Group, TU Berlin, Berlin, Germany; Neural Information Processing Group, TU Berlin, Berlin, Germany; Paper; 2025. Available: https://aes2.org/publications/elibrary-page/?id=22956

@article{combes2025neural,
  author={Combes, Paolo and Weinzierl, Stefan and Obermayer, Klaus},
  journal={Journal of the Audio Engineering Society},
  title={Neural Proxies for Sound Synthesizers: Learning Perceptually Informed Preset Representations},
  year={2025},
  volume={73},
  number={9},
  pages={561--577},
  month={October}
}